
Paper Summary: COMET (Knowledge Graph Construction)

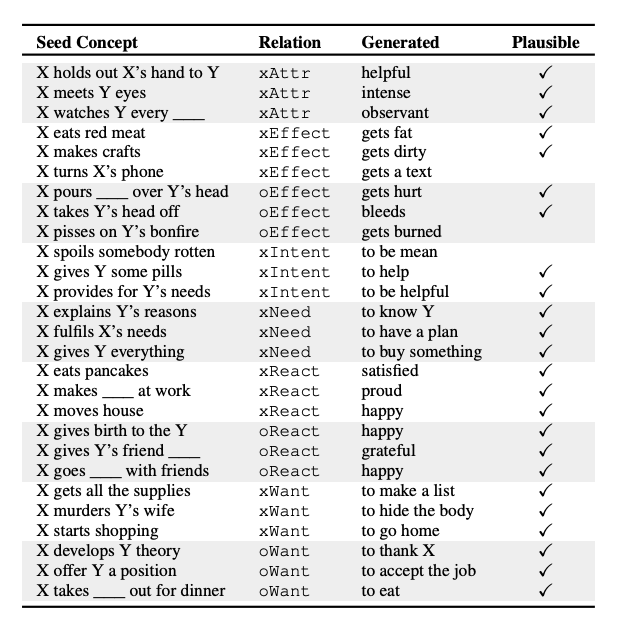

Selected {subject, relation, object} tuples generated by COMET

Paper link: https://arxiv.org/abs/1906.05317

This paper describes COMET, a method for constructing knowledge bases automatically. Previous work largely focused on encyclopedic knowledge, which has well-defined relationships; this paper instead focuses on commonsense knowledge. The authors introduce a “commonsense transformer”: a pre-trained language model that is fine-tuned on a seed knowledge base of tuples. The trained model then generates new nodes for the knowledge graph by completing an object phrase for a given subject and relation (edge type) from the existing graph.

Training

The training data consists of tuples of the form {subject, relation, object}, and the task is to generate the object given the subject and relation. The model uses OpenAI's GPT architecture, a transformer language model that builds on the transformer of Vaswani et al. Otherwise the architecture is a standard transformer. The only notable difference is how a knowledge tuple is represented: the words of the subject, relation, and object are concatenated into a single sequence, and a position embedding is added to each token.
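As a rough sketch of this input representation, the Python below turns one tuple into a single token sequence, the position indices a GPT-style model would use to look up position embeddings, and a mask marking the object tokens (the ones the model is trained to predict). It is an illustration under stated assumptions: whitespace splitting stands in for GPT's byte-pair encoding, the `encode_tuple` helper is hypothetical, and the paper's exact input format (for instance any special delimiter tokens) is not reproduced.

```python
from typing import List, Tuple


def encode_tuple(subject: str, relation: str, obj: str) -> Tuple[List[str], List[int], List[bool]]:
    """Concatenate a {subject, relation, object} tuple into one token sequence.

    Hypothetical helper for illustration. Returns the tokens, their position
    indices, and a mask that is True only for object tokens.
    """
    # Assumption: whitespace tokenization stands in for GPT's byte-pair encoding.
    subj_tokens = subject.split()
    rel_tokens = relation.split()
    obj_tokens = obj.split()

    tokens = subj_tokens + rel_tokens + obj_tokens
    # One index per token; a real model maps each index to a learned position embedding.
    positions = list(range(len(tokens)))
    # Only the object tokens would contribute to the language-modeling loss.
    loss_mask = [False] * (len(subj_tokens) + len(rel_tokens)) + [True] * len(obj_tokens)
    return tokens, positions, loss_mask


tokens, positions, mask = encode_tuple("PersonX goes to the store", "xIntent", "to buy food")
print(tokens)
print([t for t, m in zip(tokens, mask) if m])  # → ['to', 'buy', 'food']
```

The example tuple uses Atomic's `xIntent` relation name purely as sample input. Keeping the subject and relation in the same sequence as the object lets the model condition on them through ordinary left-to-right attention, with no architectural changes.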

The researchers train COMET on two seed knowledge sets, Atomic and ConceptNet. The Atomic dataset contains 877k tuples of social commonsense knowledge. Experiments on Atomic used BLEU-2 as the automatic evaluation metric, and the researchers also used Amazon Mechanical Turk workers to judge whether the generated tuples were reasonable.
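BLEU-2 scores a generated object against reference objects by combining clipped unigram and bigram precision. The following is a minimal sentence-level sketch, assuming uniform weights, no smoothing, and the standard brevity penalty. The function names are hypothetical, and the paper's actual scoring script may differ (for example in smoothing or in how references are grouped).

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu2(candidate: List[str], references: List[List[str]]) -> float:
    """Sentence-level BLEU-2 (sketch): geometric mean of clipped 1- and 2-gram
    precision, multiplied by a brevity penalty. No smoothing is applied."""
    log_precisions = []
    for n in (1, 2):
        cand_counts = ngrams(candidate, n)
        total = sum(cand_counts.values())
        if total == 0:
            return 0.0
        # Clip each candidate n-gram count by its largest count in any single reference.
        max_ref: Counter = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
        if clipped == 0:
            return 0.0
        log_precisions.append(math.log(clipped / total))

    # Brevity penalty against the reference length closest to the candidate length.
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(sum(log_precisions) / 2)
```

For example, `bleu2(['to', 'buy', 'some', 'food'], [['to', 'buy', 'food']])` evaluates to 0.5 up to floating-point rounding: 3 of 4 unigrams and 1 of 3 bigrams match, and the geometric mean of 3/4 and 1/3 is 0.5.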

The researchers also trained and tested on ConceptNet, whose relations use the same {subject, relation, object} format. On this dataset the model was evaluated by its perplexity on the test set and by the accuracy of its generated positive samples, as scored by a pre-trained Bilinear AVG model that classifies whether a tuple is correct.
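Perplexity is the exponential of the average negative log-likelihood the model assigns to the gold tokens, so lower is better. The sketch below assumes the per-token natural-log probabilities have already been computed by the model and that only object tokens are scored; `perplexity` is a hypothetical helper, not the paper's evaluation code.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood per token).

    token_log_probs: natural-log probabilities the model assigned to each
    gold object token in the test set (assumed precomputed).
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that gives every gold token probability 0.25 has perplexity 4
# (the printed value may differ from 4 by floating-point rounding).
print(perplexity([math.log(0.25)] * 10))
```

Intuitively, a perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among four tokens at each step.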

Results

The Atomic experiments showed that the model can still generate coherent knowledge when only 10% of the training data is available. On ConceptNet, human evaluators judged 91.7% of the greedily decoded tuples to be correct. Overall, the model appears to produce good-quality knowledge tuples, although some generated tuples are merely simplifications of knowledge already in the training set.
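Greedy decoding means the model picks the single most probable next token at each step until it emits an end token. The sketch below shows that loop with a hypothetical lookup-table "model" standing in for COMET; the table, `toy_model`, `greedy_decode`, and the `<end>` token are all assumptions made for illustration.

```python
from typing import Callable, Dict, List


def greedy_decode(
    prefix: List[str],
    next_token_probs: Callable[[List[str]], Dict[str, float]],
    end_token: str = "<end>",
    max_len: int = 10,
) -> List[str]:
    """Greedy decoding: at every step, append the single most probable token."""
    generated: List[str] = []
    for _ in range(max_len):
        probs = next_token_probs(prefix + generated)
        token = max(probs, key=probs.get)  # argmax over the next-token distribution
        if token == end_token:
            break
        generated.append(token)
    return generated


# Hypothetical toy "model": next-token probabilities keyed on the previous token only.
TABLE = {
    "CapableOf": {"bark": 0.6, "fly": 0.3, "<end>": 0.1},
    "bark": {"<end>": 0.9, "loudly": 0.1},
}


def toy_model(context: List[str]) -> Dict[str, float]:
    return TABLE.get(context[-1], {"<end>": 1.0})


print(greedy_decode(["dog", "CapableOf"], toy_model))  # → ['bark']
```

Greedy decoding yields one deterministic object per subject and relation, which makes it a natural setting for judging precision; sampling or beam search would instead produce several candidates per query.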